So I was reading Hacker News today and came across a article A sequel to SQL? An intro to Malloy, in which a near the top comment was

Try writing this SQL. Malloy writes SQL you can’t (I’m pretty sure of it).

https://twitter.com/lloydtabb/status/1556287859601985536

and at the time, there was no SQL responces written.. so as I read the Twitter thread, at no point was my brain like “this is too hard in SQL” and was rather dulled when I got to the auto-generated SQL,

with d1 as (

select

state

,name

,sum(number) as births

from test.public.use_1910_current

where year > 1990

group by 1,2

), name_pop as (

select

name

,state

,round((births*sum(births)over())/(sum(births)over(partition by state)*sum(births)over(partition by name)),3) as popularity_ratio

from d1

qualify births > 1000

), top_ten as (

select *, name||'('||popularity_ratio||')' as formatted

from name_pop

qualify row_number() over (partition by state order by popularity_ratio desc) <= 10

)

select state

,listagg(formatted, ', ') within group(order by popularity_ratio desc) as by_name_list_detail

from top_ten

group by 1

order by 1 limit 6;| STATE | BY_NAME_LIST_DETAIL |

|---|---|

| AK | James(1.727), Robert(1.627), Logan(1.611), Hannah(1.551), Samuel(1.541), Tyler(1.518), Benjamin(1.484), John(1.466), Ethan(1.453), William(1.443) |

| AL | Brantley(3.458), Braxton(3.185), Mary(3.060), Raven(2.734), Chandler(2.621), Allie(2.601), Ryleigh(2.573), Kameron(2.554), Jaylen(2.352), Whitney(2.336) |

| AR | Braxton(3.054), Dalton(2.850), Bentley(2.725), Hayden(2.430), Dakota(2.375), Clayton(2.355), Dustin(2.324), Brooklyn(2.137), Shelby(2.089), Payton(2.066) |

| AZ | Notnamed(47.491), Ramon(3.489), Tatum(3.408), Jesus(3.397), Francisco(3.145), Ernesto(2.970), Manuel(2.911), Adriel(2.852), Ruben(2.605), Ximena(2.598) |

| CA | Azucena(4.215), Xitlali(4.180), Joseluis(4.052), Xochitl(4.045), Miguelangel(3.894), Jocelyne(3.850), Lupita(3.839), Viridiana(3.791), Juancarlos(3.738), Arman(3.717) |

| CO | Aspen(5.148), Angelo(2.248), Quinn(1.879), Ezekiel(1.837), Rowan(1.833), Dillon(1.817), Everett(1.797), Emerson(1.752), Elias(1.688), Tanner(1.681) |

Anyways getting the data file from the github Malloy project

Loading parquet data files into Snowflake via the legacy WebUI

Making a new table with the data pulled from the variant column:

create or replace table test.public.use_1910_current as

select

raw:state::text as state

,raw:name::text as name

,raw:number::int as number

,raw:year::int as year

from test.public.name_data;At some point doing fixed point math of integers runs into the default 6dp default for division in Snowflake, thus you should rewrite the fraction of fractions as is reciprocal and multiplied form because 106.250 is not 98.857, and even if you do swap to doubles, you should always multiply verse divide first as you get better numerical stability:

select

column1 as a,

column2 as b,

column3 as c,

column4 as d,

round((a/b)/(c/d),3) as r1,

round((a/b)*(d/c),3) as r2,

round((a*d)/(b*c),3) as r3

from values

(1,2,3,4),

(1108,2606253,1375,319731698);| A | B | C | D | R1 | R2 | R3 |

|---|---|---|---|---|---|---|

| 1 | 2 | 3 | 4 | 0.667 | 0.667 | 0.667 |

| 1108 | 2606253 | 1375 | 319731698 | 106.250 | 98.826 | 98.857 |

So transcribing the generated SQL into working Snowflake SQL:

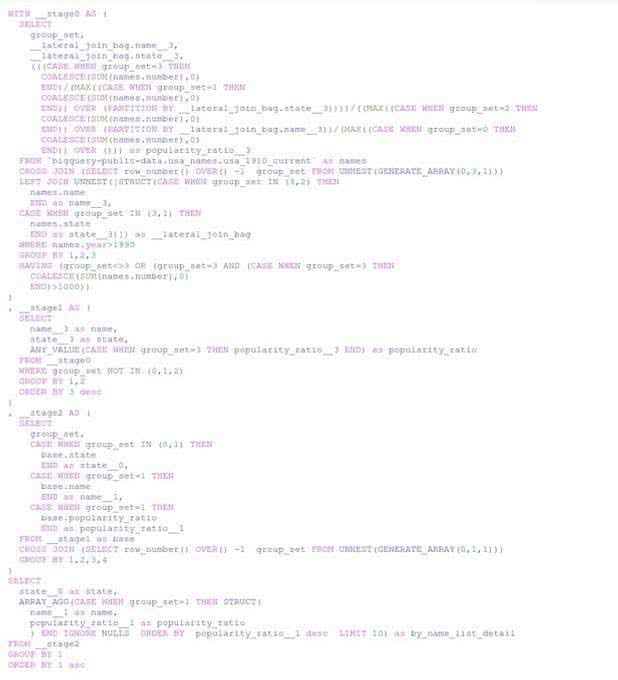

with __stage0 as (

select

group_set,

__lateral_join_bag.name__3,

__lateral_join_bag.state__3,

iff(group_set=3, coalesce(sum(names.number),0), null) as a,

max( iff(group_set=1, coalesce(sum(names.number),0), null) ) over (partition by __lateral_join_bag.state__3) as b,

max( iff(group_set=2, coalesce(sum(names.number),0), null) ) over (partition by __lateral_join_bag.name__3) as c,

max( iff(group_set=0, coalesce(sum(names.number),0), null) ) over() as d,

(a/b) / (c/d) as popularity_ratio__3

from test.public.use_1910_current as names

cross join (

select

row_number() over(order by null) -1 as group_set

from table(generator(ROWCOUNT =>4))

//select column1 as group_set from values (0),(1),(2),(3)

) as x

,lateral (

select

case when x.group_set in(3,2) then names.name end as name__3,

case when group_set in(3,1) then names.state end as state__3

) as __lateral_join_bag

where names.year>1990

group by 1,2,3

having (group_set<>3 or (group_set=3 and (case when group_set=3 then coalesce(sum(names.number),0)end)>1000))

)

,__stage1 as (

select name__3 as name,

state__3 as state,

round(any_value(case when group_set=3 then popularity_ratio__3 end),3) as popularity_ratio

from __stage0

where group_set not in (0,1,2)

group by 1,2

qualify row_number() over(partition by state order by popularity_ratio desc) <= 10

order by 3 desc

)

,__stage2 as (

select group_set,

case when group_set in (0,1) then

base.state

end as state__0,

case when group_set=1 then

base.name

end as name__1,

case when group_set=1 then

base.popularity_ratio

end as popularity_ratio__1

from __stage1 as base

cross join(select

row_number() over(order by null) -1 as group_set

from table(generator(ROWCOUNT =>2))) as x

group by 1,2,3,4

)

select

state__0 as state,

listagg(case when group_set=1 then name__1||'('||popularity_ratio__1||')' end, ', ') within group (order by popularity_ratio__1 desc) as by_name_list_detail

from __stage2

group by 1

order by 1 asc;There are some things that should be changed about this code, the order by 3 desc in __stage1 is a obvious waste of processing. But once you look at that, you notice __stage2 is joining to itself twice and then in the final select only taking one of those sets of results.

so the latter half of the code can be written:

,__stage1 as (

select name__3 as name,

state__3 as state,

round(any_value(case when group_set=3 then popularity_ratio__3 end),3) as popularity_ratio

from __stage0

where group_set not in (0,1,2)

group by 1,2

qualify row_number() over(partition by state order by popularity_ratio desc) <= 10

--order by 3 desc

)

select

state,

listagg(name||'('||popularity_ratio||')', ', ') within group (order by popularity_ratio desc) as by_name_list_detail

from __stage1

group by 1

order by 1 asc;[July 2023]

10 months later, there was more to this post, but time has moved on… so this appears to just be old ramblings.. nothing new perhaps. Anyways many people rewrote the SQL nicely on HackerNews, so that is nice to see.